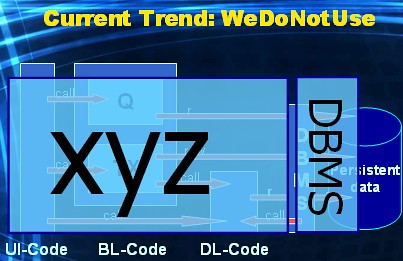

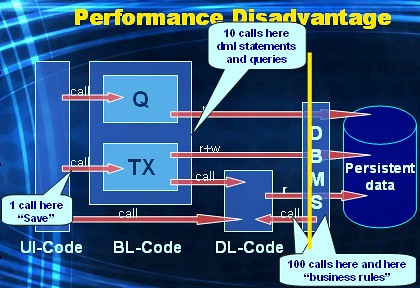

We have seen that the DBMS is the most stable component in everybodies technology landscape. We have also concluded that the DBMS has been designed to handle WoD application BL-code and DL-code. And current DBMS's are really good at this, if the underlying database design is a sound relational design and a good fit for the requirements of the WoD application. Now the latter part is up to the qualities of the person that designs the database. The former part is all about education.

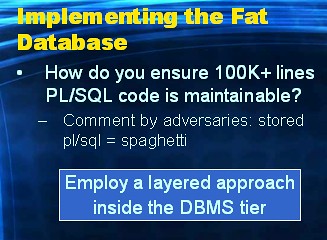

That's why I repeat here, that in order for the Helsinki approach to succeed, you'll require:

- Educated database designers

I specifically emphasize educated. You need to educate yourself to prevent fud when designing databases. Database design is all about set theory and predicate logic. Again this is what Ted Codd gave us. There are plenty of books by Chris Date to read and so to educate yourself in this area. I can of course also recommend that you study Lex' and mine book. - Experienced plsql developers

I specifically emphasize experienced since I see that many plsql developers are still stuck in the mid-nineties. They have not evolved their knowledge of the DBMS, which is in the case of Oracle is now at it's 11th major release. Compared to Oracle7 (of the mid-nineties) a lot has been added, and a lot has been improved. Many then-Myths, are no longer Myth now.

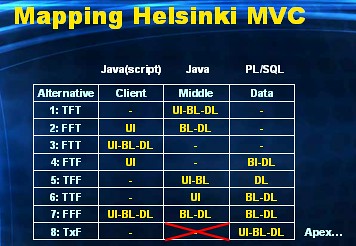

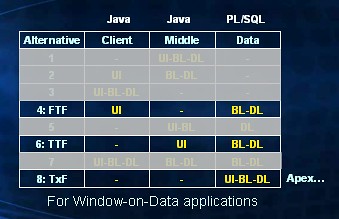

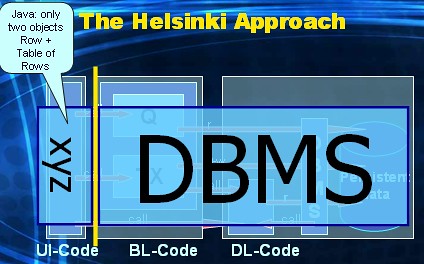

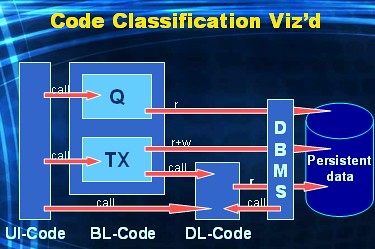

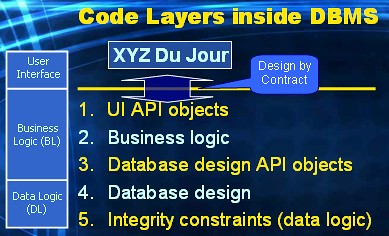

In this layered approach we materialize the WoD code classification that I have introduced: UI-logic (often outside the DBMS), BL-logic, and DL-logic. But we also introduce a separate layer, called UI API-objects, between the UI and BL code layers, and a separate layer between the BL code and the tables in the database design (database design API objects).

Layer 1: UI API objects

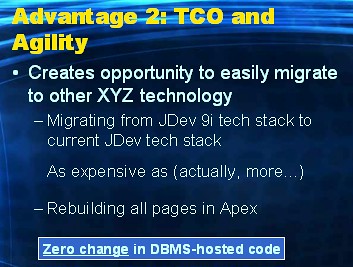

In the Helsinki declaration you need to introduce API objects inside the DBMS whose specific purpose is to be called by UI-code. These, and only these, objects will be the entry points, or API if you like, for UI-code to communicate with the DBMS. Per WoD-page you need to design these API objects. Every object in this layer is specific for some page of the WoD application. This layer is a very important layer. Why? Because these (and only these) objects might require re-engineering in case you switch to a new yafet. This layer will give you agility and enable you to easily take on new yafets every so many years. I'll give examples of how to design this layer in future posts.

Layer 2: Business logic

Nothing new here. This is the materialization of BL-code

Layer 3: Database design API objects

In this layer you implement "data services" that are shared by multiple BL-code modules. Whenever you are writing BL, and you see yourself copy-pasting or rewriting a piece rBL or wBL code that you have written before, you need to introduce a database design API object for it. Write it once, and reuse it in different BL-code sections. This layer is somewhat less important, but often found inside your software architecture.

Layer 4: Database design

This layer is sort of pro-forma. It only holds the tables (and indexes) that are part of the database design for the WoD application.

Layer 5: Data logic

This is the materialization of DL-code. Also a very important layer. The reason why data logic is visualized beneath the database design (layer 4), has to do with how Helsinki implements data logic. We'll use database triggers to implement data logic.

And now you say: "Oh no! Triggers? Toon are you serious? Tom Kyte says all triggers are evil, therefor I cannot and will not use them. "

Let's have a brief intermezzo on this topic.

The reason why Oracle rockstar Kyte is against triggers is (from what I understand) because they:

- make things happen automagically, and

- are rarely implemented correctly.

Listen.

Tom's reason 1 is due to people implementing triggers that hold wBL (write-BL) code. And I fully agree on this point: when you put wBL code in your table triggers, and you then perform dml against the table, things happen automagically. The wBL-code will in its turn perform more dml that you, the issuer of the dml against the table, might not have realized. And if there are many tables with triggers of this kind, then a cascading effect will take place, making more magic happen (which is worse). wBL code should never be executed via triggers.

<related observation>

This automagic behaviour is also why I never use cascade-delete on foreign keys, nor the default clause on columns of tables, nor the fixed-length CHAR datatype. They introduce magic when parent rows are deleted, when a (partial) row is inserted, or a less-than-the-fixed-length value is provided.

</related observation>

Tom's reason 2 is due to people implementing DL-code in triggers, and thinking they have done it right. Implementing DL-code is inherently complex matter. It is complex due to concurrency issues that need to be solved, and due to efficiency levels you would want to have (DL-code shouldn't validate a constraint when the transaction at hand doesn't necessitate it). In chapter 11 (of the book), I fully explain the issues that need to be solved when implementing DL-code. Also, a few years ago, I have demonstrated an example constraint implementation using triggers on Tom's blog. You can find it here. The point I want to make is that just because implementing DL-code via triggers is complex, doesn't mean triggers are evil and it shouldn't be done. To me it means that you need to educate yourself so that you can use triggers correctly. By the way: implementing DL-code not via triggers, but embedded inside wBL-code sections, is complex too. Maybe slightly less complex, but still complex. Triggers have the big advantage that they offer a once-and-for-all type of implementation. Having to always go through a wBL-code section (TAPI's, VAPI's, or whatever you would like to call them), is just not good enough for me.

<related observation>

I'd like to give you one closing thought here. Suppose, just suppose, Oracle would have supported SQL's CREATE ASSERTION statement, thereby providing you with a means to implement all constrainsts declaratively. Just like FK's and PK's are declarative means of implementing a constraint. You would not have to write a single line of DL-code: Oracle accepts your assertion and deduces (computes) when and how the constraint is best validated. Would you use ASSERTIONS? I think yes. You're using PK's and FK's now too, aren't you? And the way declarative constraints are validated "under the hood", is conceptually the same as you building triggers that hold DL-code: at the appropiate moments per row, per statement, Oracle fires the necessary DL-code that's part of the DBMS to validate the constraint.

</related observation>

End of intermezzo.

I want to emphasize that layer 1 (UI API objects) and layer 5 (DL code) need to be separated from the BL and DB-design API layers. Again this all boils down to BL being "the rest". Implementing DL separately, and introducing the design-by-contract UI API layer, will ensure that "the rest" becomes maintainable (thus addressing the Helsinki adversaries' main argument).

Preferably I would use a separate schema inside the database to hold the UI-API objects: I often refer to this schema as the FE (FrontEnd) schema. The yafet would connect to the database with yet another schema: one that only as CREATE SESSION system privilege and all necessary object-privileges on the UI-API objects inside the dedicated FE-schema.

In the next post I will demonstrate the Helsinki code layers with an example database design, some constraints, and an example Window-on-Data page on top of that design.

Hopefully that will be posted in less than two weeks this time, but I do have a real life and a real job next to this blogging too :-).